I've recently been doing some work to migrate a set of containerised services and apps from AWS ECS Clusters to a self-hosted Kubernetes environment - in part because of cost and in part to see how easy such a migration would be.

More about this migration in a future article.

As part of this I needed a solution to trigger deployment updates when a new version of a container was built - and I've documented my solution here.

Original Setup

My code lives on GitHub, and I mostly used AWS CodePipeline and CodeBuild to rebuild containers when code changed and to manage updates to the running ECS services. I have several pre-built Terraform modules for deploying ECS Tasks and Services and for linking these to CodePipelines. You can see an example here: https://github.com/richardjkendall/tf-modules/tree/master/modules/basic-cicd-ecs-pipeline

My ECS cluster was fairly standard, consisting of a spot fleet for cost management, fronted by an Application Load Balancer. I did use a custom solution based on haproxy for distributing traffic to tasks as they came online. This meant I did not have to touch ALB target groups or rules, as my solution discovered the services and tasks using AWS Cloud Map service discovery: https://github.com/richardjkendall/haproxy

As part of my migration, I wanted to move away from using my haproxy solution and I also wanted to move from CodePipeline to GitHub Actions, as I find these are richer in functionality and free at my usage levels.

What I needed

Moving away from CodePipeline, which provided a 'deploy action' for ECS, meant I needed a way to update my Kubernetes deployments with the tags of the newly built images.

I could have exposed my control-plane API over the internet, which is theoretically safe as long as my private key remains secure, but this seemed like a bit of a sledgehammer approach. So I instead decided to build a dedicated API which can only update the image of a single container in a single deployment.

API design

The API is very simple, adopting the following convention:

    GET /deployment/[namespace]/[deployment]/[container]

Which returns a simple JSON document with the current image:

    {
      "Namespace": "[namespace]",
      "Deployment": "[deployment]",
      "Container": "[container]",
      "Image": "[image]"
    }

Or a 404 Not Found error if the container, deployment or namespace cannot be found.
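To make the convention concrete, here is a minimal Python sketch of how a path following it could be parsed and how the response document could be built. The real API is written in Go, so this is not the actual implementation; the names `Target`, `parse_target` and `describe` are hypothetical and exist only for illustration.

```python
import json
from typing import NamedTuple, Optional


class Target(NamedTuple):
    """The three path components that identify one container (hypothetical type)."""
    namespace: str
    deployment: str
    container: str


def parse_target(path: str) -> Optional[Target]:
    """Parse /deployment/[namespace]/[deployment]/[container].

    Returns None when the path does not follow the convention, which a
    server would typically translate into an error response.
    """
    parts = path.strip("/").split("/")
    if len(parts) != 4 or parts[0] != "deployment" or not all(parts[1:]):
        return None
    return Target(*parts[1:])


def describe(target: Target, image: str) -> str:
    """Build the JSON document returned for a container, using the field names above."""
    return json.dumps({
        "Namespace": target.namespace,
        "Deployment": target.deployment,
        "Container": target.container,
        "Image": image,
    })
```

A lookup that finds nothing for a well-formed path would then map to the 404 Not Found response described above.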

To update the image, the URI is the same but the method is now POST:

    POST /deployment/[namespace]/[deployment]/[container]

Where the body of the POST is a JSON document specifying a new image:

    {
      "Image": "[image]"
    }

This returns the full details along with the updated image name/tag, or an error if the document is not well-formed or if the namespace, deployment or container could not be found.
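The update logic can be sketched in a few lines of Python. This is an illustration of the idea rather than the Go code in the repository: it validates the POST body and then changes the image of exactly one named container in a dictionary shaped like a Kubernetes Deployment (`spec.template.spec.containers`). The exception names `BadRequest` and `NotFound` are hypothetical, and how the real API maps these cases to HTTP status codes is not assumed here.

```python
import json


class BadRequest(Exception):
    """The request body is not a well-formed update document (hypothetical name)."""


class NotFound(Exception):
    """The named container does not exist in the deployment (hypothetical name)."""


def parse_update(body: bytes) -> str:
    """Extract the new image from a body of the form {"Image": "..."}."""
    try:
        doc = json.loads(body)
    except ValueError as exc:  # covers invalid JSON and undecodable bytes
        raise BadRequest("body is not valid JSON") from exc
    image = doc.get("Image") if isinstance(doc, dict) else None
    if not isinstance(image, str) or not image:
        raise BadRequest('body must contain a non-empty "Image" string')
    return image


def set_container_image(deployment: dict, container: str, image: str) -> dict:
    """Set the image of one container, leaving every other field untouched."""
    for c in deployment["spec"]["template"]["spec"]["containers"]:
        if c["name"] == container:
            c["image"] = image
            return deployment
    raise NotFound(f"container {container!r} not found")
```

Restricting the change to a single field of a single container is what keeps this approach narrower than exposing the whole control-plane API.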

Implementation

I chose Go to implement the API because there is an existing Kubernetes SDK/API client which makes the job much easier. You can find the code here:

https://github.com/richardjkendall/kube-update-api

There are some example manifests provided to help with deploying this on a Kubernetes cluster.

It is designed to run 'in-cluster' which means it will pick up the credentials to access the Kubernetes control-plane API from the service account that the pod runs under. The tool does also support being run outside of a cluster, but this was just done for the purposes of building and testing it.
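For readers unfamiliar with what 'in-cluster' means in practice: Kubernetes mounts the service account token into the pod under `/var/run/secrets/kubernetes.io/serviceaccount` and sets the `KUBERNETES_SERVICE_HOST` and `KUBERNETES_SERVICE_PORT` environment variables, and client libraries read these to reach the control plane. The Python sketch below shows that discovery step using only the standard library. The function name `in_cluster_config` and the shape of the returned dictionary are assumptions for illustration; the actual tool relies on the Go client library to do this.

```python
import os
from pathlib import Path
from typing import Mapping, Optional

# Standard mount point for service account credentials inside a pod.
SA_DIR = Path("/var/run/secrets/kubernetes.io/serviceaccount")


def in_cluster_config(
    environ: Mapping[str, str] = os.environ,
    sa_dir: Path = SA_DIR,
) -> Optional[dict]:
    """Return API server details when running in a pod, otherwise None."""
    host = environ.get("KUBERNETES_SERVICE_HOST")
    port = environ.get("KUBERNETES_SERVICE_PORT")
    token_file = sa_dir / "token"
    if not host or not port or not token_file.is_file():
        return None  # not in a cluster; a caller could fall back to a kubeconfig
    if ":" in host:  # bracket IPv6 literals so the URL stays valid
        host = f"[{host}]"
    return {
        "server": f"https://{host}:{port}",
        "token": token_file.read_text().strip(),
        "ca_file": str(sa_dir / "ca.crt"),
    }
```

Returning `None` outside a cluster leaves room for the fallback path used when building and testing locally.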

The tool needs access to the deployments API, which can be achieved using a ClusterRole similar to this one, bound to the tool's service account with a ClusterRoleBinding:

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: update-api-cr
      labels:
        app: update-api
    rules:
      - apiGroups:
          - 'apps'
        resources:
          - deployments
          - namespaces
        verbs:
          - get
          - list
          - update

And if you are using version 1.24 (or newer) of Kubernetes, you'll need to generate the token secret for the service account manually, as it is no longer done automatically:

    apiVersion: v1
    kind: Secret
    metadata:
      name: update-api-sa-secret
      annotations:
        kubernetes.io/service-account.name: update-api-sa
    type: kubernetes.io/service-account-token

Using it

The API does not implement any kind of authentication or authorisation checking; it expects to sit behind a proxy layer which does that. I often use my basic auth reverse proxy for this task, which is what I did in this instance: https://github.com/richardjkendall/basicauth-rproxy

I've then exposed it via Cloudflare (I have an Argo Tunnel to my Kubernetes cluster), so I can call the API from GitHub Actions to trigger deployment updates.

Here's an example of how I did that:

    - name: Deploy to NP if on develop
      if: github.ref == 'refs/heads/develop'
      run: |
        echo "{\"Image\": \"{registry}/{image}:${{steps.vars.outputs.tag}}\"}" > update.json
        cat update.json
        curl -X POST -H "content-type: application/json" -u "${{secrets.DEPLOY_USER}}:${{secrets.DEPLOY_PASSWORD}}" -d @update.json ${{secrets.NP_DEPLOY_URL}}

Here all the usernames, passwords and URLs are hidden (they are stored as GitHub Actions secrets), but the procedure is simple: it creates a JSON file with the right structure and then posts it to the URL to trigger the update.
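If you would rather not hand-escape JSON in a shell string, the same call can be sketched in Python using only the standard library. This is an alternative illustration, not what my workflow runs: the function name `build_update_request` is hypothetical, and the URL, credentials and image in the usage note are placeholders.

```python
import base64
import json
import urllib.request


def build_update_request(url: str, user: str, password: str, image: str) -> urllib.request.Request:
    """Build (but do not send) the POST that asks the API to switch to a new image."""
    # json.dumps takes care of quoting, so unusual characters in the tag are safe.
    body = json.dumps({"Image": image}).encode("utf-8")
    # HTTP Basic auth header, matching what curl's -u option sends.
    credentials = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {credentials}",
        },
    )
```

Passing the returned request to `urllib.request.urlopen` sends it; in a workflow the arguments would come from the same secrets and step outputs used in the step above.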

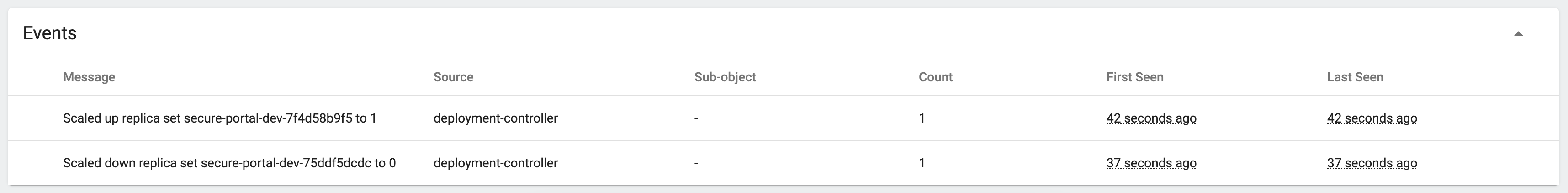

After this call is made you can see the update to the deployment, here's an extract from my dashboard:

-- Richard, Sep 2022